Updated on August 29, 2022.

Overview

Glint’s 360 Feedback program is designed to help employees become more effective by showing them how their own perceptions of their strengths and opportunities match or differ from how others perceive them, and by nudging them to act on the feedback by creating a development goal. We recommend using Glint 360 alongside Glint Engage and Glint Perform, but it also stands on its own.

General FAQs

Q: What are 360s?

A: Organizations use 360-degree feedback (a.k.a. multi-rater feedback, multi-source feedback, or multi-source assessment) as a way to provide their people with insight into their strengths and opportunities from a variety of viewpoints. Rather than relying solely on the perspective of an employee’s immediate supervisor, organizations use the 360 process to capture input from the person being reviewed as well as their direct reports and colleagues. This approach provides a robust and accurate view of the employee’s strengths and opportunities. When collected and applied in the right way, 360s can provide employees with tremendous value, such as increased self-awareness, enhanced performance, and opportunities to learn and grow, and can help organizations achieve their business and engagement goals.

Q: What are the use cases for 360s?

A: Glint recommends the following use cases:

- High-potential/high-impact development

- Point-in-time development

- Event-based development

Q: Should 360 input be used to inform performance decisions?

A: Glint supports the concept of using multiple points of view to understand an individual’s performance, but does not advocate using 360s alone to make performance decisions. We take the position that they should be used for development purposes, for the following reasons:

- Ensuring 360s are developmentally-focused helps increase candor and reduce bias from colleagues trying to influence the outcomes of the feedback in favor of or against the participant.

- When 360s are used for performance evaluation, some may try to “game the system” in their own favor by providing overly negative feedback about a coworker up for the same promotion opportunity.

- Worse yet, 360s can be misused as a punitive tool to weed out low performers from organizations, which they should never be.

Q: How were Glint's Manager 360 items/questions developed?

A: A team of Glint People Scientists and I/O Psychologists conducted an extensive study of the academic research, commercial 360 models, and organizational competency models to identify 319 unique work behaviors that form the basis for a Glint Manager 360.

The 300+ behaviors were then content-analyzed and coded into a total of 17 clusters. Each behavior within the cluster was then reviewed for quality and conceptual overlap, and narrowed down to a list of 73 behaviors across the clusters.

A validation study was then conducted using these 73 behaviors and multiple scale variations to establish internal consistency, discriminant ability, and relationship to six overall outcome measures (overall satisfaction, likelihood of recommending, choosing to work with the person again, overall performance, and comparison of performance to others). A combination of descriptive statistics, correlations, factor analyses, decision trees, and expert judgment was then used to identify the most relevant behaviors for managerial success. To see our list of recommended questions, along with alternate questions, please see this document.

Q: How can we ensure 360s are successful?

A: With careful planning and implementation, we can help you get it right.

On its face, the 360s process appears simple enough to execute. Yet the traditional way 360s are administered has often failed to achieve the desired results. To ensure success, the 360s process should be:

- Intentional: Clearly define the purpose, and directly align the process with actual business needs, key organizational behaviors, and/or cultural values.

- Relevant: Both in content and in timing, the process needs to be relevant to the employee. Topics covered should be directly applicable to the employee’s job and aligned to their development journey.

- Supportive: Be prepared to provide support to both the raters and the participant. For 360s to be useful, the right raters need to be selected and guided on providing effective feedback. Guidance for 360 participants is also recommended to ensure they are clear on the purpose of the 360, understand how to read their report, and are aligned with expectations for application and follow-through.

- Ongoing: Lack of participant follow-through is often cited as one of the biggest barriers to 360 success (Bracken, Rose, & Church, 2016). To combat that and instead encourage accountability, integrate developmental goals into other organizational practices, such as quarterly goal discussions. To help focus the participant on the importance of follow-through, ensure their manager and senior leaders actively support their development plan.

Q: What email address does the survey come from?

A: The sender email address is survey@mail.glintinc.com.

Q: Can I start my feedback and complete it later?

A: Yes, Glint feedback surveys automatically save your answers. To complete your feedback anytime before the end date, select the link in the original feedback invitation email or open it from the “Feedback” tab within Glint.

Q: Which browsers are supported?

A: Glint 360 was optimized for Chrome but will work with most browsers.

Q: Who do I contact for support?

A: For phone support:

US Toll-free (888) 331-4495

US (650) 817-7297

UK +44 (800) 368-8224

Japan local +81345809725

Q: Can we edit a 360 cycle after it has started?

A: This is not currently supported. After a 360 cycle has started, no subjects can be added.

Only the following admin actions are allowed:

- Add email reminders

- Extend end date for any subject

- Remove subjects

- Add raters

- Remove raters who have not responded

- Add or change a subject’s coach

- Move a rater from one rater category to another

- Edit the Intro/Thank You text

Q: How can I log in to Glint to see my feedback information?

A: You can access Glint with this link:

This link will ask you for your email address and company ID. If you are using this FAQ internally, replace this with your SSO URL or use your company’s internal instructions for accessing Glint.

Q: What can I see in the Feedback tab?

A: After you log into Glint and select the “Feedback” tab, you will be able to see:

“My Feedback”

- “In Progress”

  - All of your active feedback requests such as your 360 Feedback cycle.

  - If you select your 360 Feedback card, you can:

    - Add raters.

    - Complete your self-assessment.

    - Delete raters who have not yet responded.

    - View who has responded to your 360 cycle (depending on how your admin has set up the 360).

- “Feedback History”

  - All of your completed feedback.

  - Select your 360 Feedback card to access your 360 report.

A report will only be available if you completed your self-assessment and the confidentiality threshold for at least one of your rater categories was met. Otherwise, there will be a “Report Not Generated” note on your 360 Feedback card.

“Feedback Requests”

- “To Do”

  - Open requests for you to provide feedback on a colleague.

- “History”

  - Completed or expired requests for you to provide feedback.

Admin FAQs

Q: How long is a 360 cycle?

A: The timeline of a 360 cycle is customized for each customer at the cycle level. A company that runs multiple cycles concurrently can have a different timeline for each one.

Glint follows these guidelines:

- Cycle Start Date (subject invited)

  - Determined by the 360 administrator.

- Rater Selection End Date

  - 1-2 weeks after the Cycle Start Date.

  - This is a soft deadline that gives us a date to work backwards from when scheduling the rater selection reminder email. Raters can still be added until the end date.

- Cycle Close Date

  - 2-3 weeks after the Rater Selection End Date.
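The guideline above is simple date arithmetic. As a rough planning aid, it can be sketched in Python as follows. The function name and its parameters are hypothetical and are not part of the Glint product; the defaults assume the longer end of each recommended range.

```python
from datetime import date, timedelta


def suggest_cycle_dates(cycle_start, rater_selection_weeks=2, feedback_weeks=3):
    """Return (rater_selection_end, cycle_close) for a planned 360 cycle.

    Hypothetical helper: the guideline suggests 1-2 weeks for rater
    selection and a further 2-3 weeks before the cycle closes.
    """
    rater_selection_end = cycle_start + timedelta(weeks=rater_selection_weeks)
    cycle_close = rater_selection_end + timedelta(weeks=feedback_weeks)
    return rater_selection_end, cycle_close


# Example: a cycle that invites subjects on Monday, September 5, 2022.
selection_end, close = suggest_cycle_dates(date(2022, 9, 5))
print(selection_end, close)  # 2022-09-19 2022-10-10
```

Passing `rater_selection_weeks=1` and `feedback_weeks=2` gives the shortest timeline within the guideline.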

Q: Who do we let know if there is a specific feature that we think would be beneficial to add to 360?

A: We welcome your feedback. Please feel free to share it directly with your CSM or send your feedback to support@glintinc.com.

Q: What types of questions can we include in the 360 assessment?

A: Questions for raters can be either scaled or open-ended. Scaled questions also come with the option to turn on comments so the rater may provide additional information. We currently support 5-point and 7-point scales.

Q: Can we make certain questions optional or mandatory?

A: Yes, you can make a question optional in the survey builder with a single click. We recommend that all scaled questions be optional so that a feedback provider can skip a question if they don’t believe they have enough information to make an assessment.

Q: Can we have different questions (and/or competencies) for different orgs, levels, ICs vs. managers?

A: Yes, you can have different questions/competencies for different roles. These would be set up as separate 360 programs; for example, one for managers and another for executives. Within one 360 cycle, however, subjects and feedback providers will all be asked the same questions.

Q: Are questions for different roles pre-populated by HRIS data?

A: No, different questions/competencies cannot be pre-populated based on HRIS data.

Q: Can we have different scale anchors/descriptions for different orgs, levels, ICs vs. managers (all pre-populated based on HRIS data)?

A: Yes, you can have different scale anchors/descriptions for each 360 program (example: one program for managers and another for executives), and these programs can run simultaneously. Within one 360 cycle, however, the same scale anchors/descriptions must be used for all scaled questions. The scale anchors/descriptions are not pre-populated based on HRIS data.

Q: For scaled questions, what is the maximum number of scale points (i.e., 5-point scale vs. 7-point scale)?

A: You can select either 5-point or 7-point scales; Glint doesn't recommend anything higher.

Q: In reporting, will scaled scores be transformed to a 100-point scale as they are in Engage?

A: No, the scores remain on a 5- or 7-point scale because ideally each point corresponds to a specific assessment. For example, Glint recommends using a 5-point scale, with the following recommended, default labels:

1 - Major Opportunity

2 - Opportunity

3 - Competent

4 - Strength

5 - Major Strength

Q: For rating scales, can we include a “No Opportunity to Observe” option that won't be factored into scores?

A: Not in the rating scale itself, but you can make a question optional and direct raters to skip a question if they don’t feel they have enough information to make an assessment.

Q: Can you add open-ended comments to a question that is not open-ended (like in Engage)?

A: Yes, you can turn on optional comments.

Q: Is there a minimum or maximum number of questions that can be included?

A: No, but to create focus and encourage high-quality feedback, we recommend your 360 survey include a maximum of 10-20 questions, including 2-3 open-ended questions.

Note that in our 360 report, we show the top 3 relative strengths, as well as the top 3 relative opportunities for a subject, and these are based on the “All But Self” average score of scaled questions that are mapped to a competency. So, we recommend having a minimum of 6 scaled questions that are mapped to a competency in your survey.

For reference, the Glint Manager 360 survey has 18 questions total: 16 scaled questions that are mapped to 13 competencies and 2 open-ended questions.
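To illustrate why at least 6 competency-mapped scaled questions are recommended, here is a minimal Python sketch of picking the top 3 relative strengths and opportunities from “All But Self” averages. It is an illustration under assumptions, not Glint's actual implementation: the function name, the question labels, and the scores are made up, and tie-breaking is not addressed.

```python
def relative_strengths_and_opportunities(all_but_self_scores, count=3):
    """Rank questions by "All But Self" average and return two lists:
    the `count` highest-scoring and the `count` lowest-scoring questions.

    Hypothetical helper for illustration only.
    """
    ranked = sorted(all_but_self_scores.items(), key=lambda item: item[1], reverse=True)
    strengths = [name for name, _ in ranked[:count]]
    opportunities = [name for name, _ in ranked[::-1][:count]]
    return strengths, opportunities


# Made-up "All But Self" averages on a 5-point scale.
scores = {
    "Communicates clearly": 4.6,
    "Gives feedback": 4.1,
    "Sets direction": 3.9,
    "Delegates": 3.4,
    "Develops others": 3.0,
    "Manages conflict": 2.7,
}
strengths, opportunities = relative_strengths_and_opportunities(scores)
print(strengths)      # ['Communicates clearly', 'Gives feedback', 'Sets direction']
print(opportunities)  # ['Manages conflict', 'Develops others', 'Delegates']
```

With fewer than 6 questions, the two lists would share at least one question, which is the reason for the recommended minimum.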

Q: Is there a max number of 360s we can launch?

A: No, we provide unlimited access to 360s. You can also run multiple 360 programs in parallel where the number and content of survey questions differ (example: one for managers and a more in-depth one for executives).

Q: Can a manager set up a 360 on behalf of a direct report? Can an HRBP set up a 360 on behalf of a leader?

A: An admin/HRBP can set up a 360, but a manager cannot.

The manager would need an admin login or access to do so, which we don’t recommend. An admin/HRBP can also set up the entire 360 process for a busy leader to minimize the work needed by the executive; the admin/HRBP would select all their raters and set up the automated reminders for raters to provide feedback.

Q: Would the HRBP be the ‘coach’ in the system? We would not want to grant HRBPs admin access, but would want to enable them to launch 360s on behalf of the leaders they support.

A: Only admins can launch 360s. Anyone in your HRIS system can be designated as a subject’s coach and be notified by email when one of their subject’s 360 reports is available.

Q: What types of employees would be considered ‘coaches’ in the program?

A: It is up to your organization, depending on who you want to have access to a 360 subject’s report. Often, a manager is also the coach. It’s best practice that they receive some training to understand the 360 report and how to have a coaching session with their direct report about the results. The subject, coach, and manager can be configured to automatically get the subject’s report when it’s ready, with no additional effort needed by you.

Q: If we make all managers coaches, can they kick off 360s for their direct reports without an admin?

A: Coaches cannot launch 360s. To better maintain governance over 360 programs, only admins have this level of access.

Q: Can an employee set up their own 360?

A: No. An employee can select their own raters, but they can’t self-select to get a 360. If an employee has been added by an admin as a subject of a 360, once the 360 cycle goes live, the employee will get an email letting them know that they’re part of a 360 cycle, prompting them to select their raters and complete their self-assessment.

Q: Is it possible to configure an option to have the 360 subject not know about the 360?

A: No, since we have designed the 360 report to help subjects understand how their own perception of their strengths and opportunities matches or differs from others, we require the subject to complete their self-assessment in order for a 360 report to be generated.

Q: Can an IC be a subject for the 360 feedback?

A: Yes, you can turn off the Direct Reports category for all subjects in a 360 cycle so that an IC can become a subject. Also, a 360 cycle can run with a mix of ICs and managers, along with the added instruction that ICs can skip adding “Direct Reports” raters. Our 360 report is built so that if no responses have been received for one rater category, then that rater category is automatically excluded from the report.

Q: Can the 360 be kicked off without the self-assessment?

A: No, the self-assessment is required. The 360 report will only be generated if the subject has completed their self-assessment and the confidentiality threshold has been met for at least one rater category.
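The report-generation rule in this answer can be expressed as a small check. The Python sketch below is illustrative only: the function name, the category names, and the threshold values are assumptions for the example, not the product's internals.

```python
def report_is_generated(self_assessment_complete, respondents_by_category, thresholds):
    """Return True when a 360 report would be generated under the stated rule:
    the self-assessment is complete AND at least one rater category has met
    its confidentiality threshold.

    Hypothetical helper; `thresholds` maps category name -> minimum respondents.
    """
    if not self_assessment_complete:
        return False
    return any(
        respondents_by_category.get(category, 0) >= minimum
        for category, minimum in thresholds.items()
    )


# Example thresholds (assumed values for illustration).
thresholds = {"Manager": 1, "Direct Reports": 3, "Peers": 3}

print(report_is_generated(True, {"Manager": 1, "Peers": 2}, thresholds))         # True
print(report_is_generated(False, {"Manager": 1, "Peers": 5}, thresholds))        # False
print(report_is_generated(True, {"Direct Reports": 1, "Peers": 2}, thresholds))  # False
```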

Q: Is omitting the self-assessment a possibility in the future?

A: This isn’t how we intended 360s to be used, so this isn’t on the roadmap.

Q: Can we see aggregate scores - trending over time - in the product dashboard?

A: No, not in the product dashboard. However, after the 360 cycle closes, you can export all rating and comments data for subjects for whom a 360 report was generated into a CSV file to do aggregate analysis.
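As a rough idea of what aggregate analysis on an exported CSV could look like, here is a minimal Python sketch. The column names (`subject`, `rater_category`, `competency`, `rating`) and the sample rows are hypothetical; check the header row of your actual export and adjust accordingly.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Made-up sample standing in for an exported file; real column names may differ.
SAMPLE_EXPORT = """subject,rater_category,competency,rating
alice,Peers,Communication,4
alice,Self,Communication,5
bob,Peers,Communication,3
bob,Manager,Feedback,4
alice,Manager,Feedback,
"""


def average_by_competency(csv_file):
    """Average rating per competency across all subjects,
    skipping self-ratings and unanswered (blank) ratings."""
    ratings = defaultdict(list)
    for row in csv.DictReader(csv_file):
        if row["rater_category"] == "Self" or not row["rating"]:
            continue
        ratings[row["competency"]].append(float(row["rating"]))
    return {competency: mean(values) for competency, values in ratings.items()}


print(average_by_competency(io.StringIO(SAMPLE_EXPORT)))
# {'Communication': 3.5, 'Feedback': 4.0}
```

To run this against a real export, replace `io.StringIO(SAMPLE_EXPORT)` with an opened file, for example `open("export.csv", newline="")`.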

Q: Might aggregate scores - trending over time - eventually be available?

A: This is on our longer-term roadmap.

Q: Can we apply filters to aggregate scores?

A: No, because we do not currently show aggregate 360 scores in the product.

Q: How do HRBPs and others find out when a 360 report for one of their subjects is ready?

A: We send emails to coaches, managers, and subjects when the subject’s 360 report is ready and they have been granted access by the admin. If the HRBP is set as the coach, then they’ll get the email when a subject’s report is available.

Q: Can a manager see results first, and then communicate to their direct reports?

A: Yes, the admin can configure this on the report delivery page. The admin decides whether the subject, manager, and coach receive access to a subject’s report and when that access is granted.

Q: For rater categories which have a minimum confidentiality threshold of 3 or higher, is the confidentiality threshold applied at the question-level?

A: No, we apply the confidentiality threshold at the survey level. All ratings and comments for a rater category are shown in aggregate, provided the confidentiality threshold has been met for that category. For example, suppose the confidentiality threshold is 3 for peers and 3 for collaborators. If a subject has 3 peers and 2 collaborators respond, only the aggregated feedback from peers is included in that subject’s 360 report. If one of the peers skipped a question, the ratings and/or feedback from peers are still shown in aggregate for that question.
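The survey-level behavior can be sketched in Python as follows. This is an illustration under assumptions, not Glint's implementation: the function name and data shapes are hypothetical, and it assumes that a skipped question is simply left out of that question's average.

```python
from statistics import mean


def question_averages(responses, threshold):
    """Aggregate one rater category's responses.

    `responses` holds one dict per rater who responded, mapping question ->
    rating (None if the rater skipped it). The threshold is checked once
    against the number of respondents (survey level), not per question.
    Returns None when the category is left out of the report.
    """
    if len(responses) < threshold:
        return None
    questions = sorted({question for response in responses for question in response})
    averages = {}
    for question in questions:
        ratings = [r[question] for r in responses if r.get(question) is not None]
        if ratings:
            averages[question] = mean(ratings)
    return averages


peers = [{"Q1": 4, "Q2": 5}, {"Q1": 3, "Q2": None}, {"Q1": 5, "Q2": 3}]
collaborators = [{"Q1": 2, "Q2": 4}, {"Q1": 3, "Q2": 4}]

print(question_averages(peers, threshold=3))          # {'Q1': 4, 'Q2': 4}
print(question_averages(collaborators, threshold=3))  # None
```

Here Q2 is still reported for peers even though only two of the three peers answered it, because the threshold was met by the number of peers who responded to the survey.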

Q: Is the SFTP / HRIS integration the same as Engage?

A: Yes.

Q: How do we generate raw exports of results? Will there be a limit to the number of exports we can do?

A: Admins can download a CSV file of all the rating and open-ended feedback for subjects for whom a 360 report was generated in each cycle. There is no limit to the number of exports.

Q: Can we aggregate manager reports, like in Engage, so a leader can see all their directs and their skip level directs’ reports?

A: No, 360 subject reports are not aggregated and reports aren’t automatically provided to managers or skip level managers. The Administrator can release a 360 report to a manager, and a skip level manager can be selected as a coach; however, the subject's hierarchy isn’t automatically granted access.

Q: Can we auto-populate HRBP as internal coaches?

A: Not currently, but we are actively building the ability to bulk upload subjects and coaches (target timing: Summer 2020) and bulk extend due dates (target timing: Fall 2020).

Q: Can we change the internal coach label to HRBP?

A: No, the Subject, Manager, and Coach labels cannot be changed. However, those labels are only visible to the admin. Additionally, 360 emails and the 360 overview page seen by the subject can be edited to refer to coaches as HRBPs.

Q: Can HRBPs have automatic access to view all 360 reports for anyone in the org they support?

A: Yes, if HRBPs have admin access/permissions.

Q: Can we separate admin access to Engage data?

A: Yes, we can separate 360s admin from Engage admin.

Subject FAQs

Q: If the individuals pre-populated into my rater categories are incorrect, can they be corrected?

A: Yes, when reviewing and selecting raters, you can add, remove, and update the individuals listed as necessary. Once you invite the raters, an email will be sent to them to invite them to provide feedback.

Q: Is there a limit to the number of raters I can request?

A: There is no limit to the number of raters that can be requested, but note that the minimum threshold for all rater groups is three (3) in order for that group’s data to display (with the exception of the manager group, for which the threshold is one (1)).

Glint’s People Science POV: “We believe that the leader should include as many Raters as possible who are actually in a position to provide valuable feedback on how you show up at work. Providing a recommendation can sometimes be difficult because if we say 8-10 raters per group and they only have 4 direct reports then we would rather them keep it to 4 for that group instead of inviting people that aren’t able to provide valuable feedback just to meet our recommendation.”

Q: How can I get to my 360 report other than by using the link in the email letting me know my report is available?

A: Subjects can see their 360 report by logging into Glint and selecting the “Feedback” tab. Subjects can see a 360 feedback card in the “My Feedback > Feedback History” section. Selecting the card takes them to their 360 report.

Q: How can managers see the reports/results for their direct reports?

A: If a manager has been granted access to see the results for a direct report, they will receive an email with a link to the report.

Alternatively, they can also access it through the Glint Dashboard by either of the following paths:

Glint Dashboard > Search Icon > Search for Direct Report > Within the Direct Report's Card, choose the 'Feedback' tab

Glint Dashboard > Focus Areas > 'My Directs' > Choose Direct Report > Within the Direct Report's Card, choose the 'Feedback' tab

Q: How can I tell how many raters responded?

A: While a 360 cycle is live, log into Glint, select the “Feedback” tab, and select the 360 feedback card in “My Feedback” > “In Progress”. Select Step 1 to see a list of invited raters and who has responded. In addition, Glint will send you an email shortly before the 360 cycle ends with a list of raters who haven’t responded.

After the 360 cycle is complete and you’ve received access to your 360 report, on the “Insights” tab, you can click the “Response Rate” box to see a list of all raters who responded.

Q: What are the various options for establishing strengths and opportunities? Are they based on a particular rating threshold or could they be relative to one’s own scores?

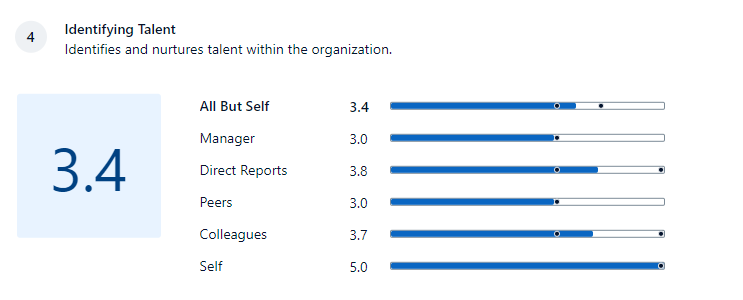

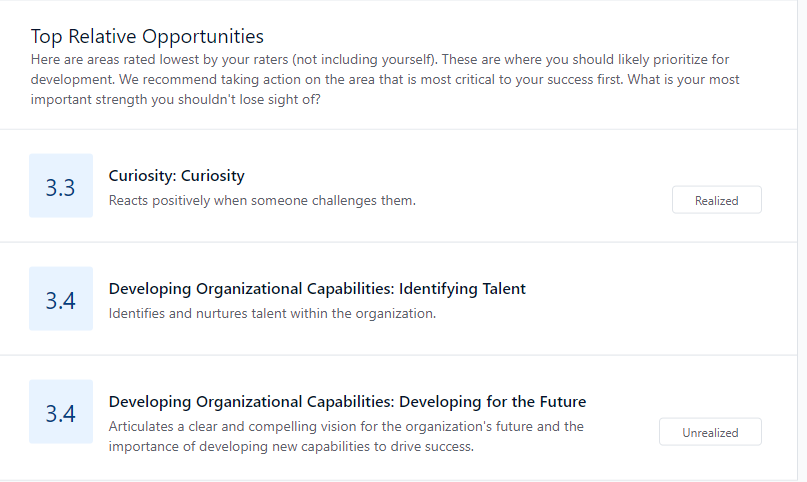

A: Each scaled question mapped to a competency is categorized as a strength or an opportunity based on the “All But Self” average score, which is the average of each rater category’s score, excluding the subject’s own. If a 5-point scale is being used, a behavior is considered a strength if the “All But Self” score is between 3 and 5, and an opportunity if it is below 3.

Strengths and opportunities are generally labeled “realized” if the difference between the “All But Self” score and the “Self” score is within +/- 1, because this indicates that the subject’s self-perception is close to how others perceive them.

Strengths and opportunities are generally labeled “unrealized” if the difference is less than -1 or greater than +1, because this indicates that the subject’s self-perception differs from how others perceive them.
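The labeling rules above can be sketched in Python for a 5-point scale. This is a simplified illustration, not the product's exact logic (the rules are described as what is “generally” applied): the function names, the `midpoint` and `tolerance` parameters, and the sample scores are assumptions.

```python
from statistics import mean


def all_but_self(category_scores):
    """Average of each rater category's score, excluding the subject's own."""
    return mean(category_scores.values())


def classify(category_scores, self_score, midpoint=3.0, tolerance=1.0):
    """Label a behavior on a 5-point scale.

    Strength if "All But Self" is 3 or higher, otherwise opportunity;
    "realized" if the difference from the "Self" score is within +/- 1,
    otherwise "unrealized".
    """
    others = all_but_self(category_scores)
    kind = "strength" if others >= midpoint else "opportunity"
    awareness = "realized" if abs(others - self_score) <= tolerance else "unrealized"
    return f"{awareness} {kind}"


# Made-up category scores for two behaviors.
print(classify({"Manager": 4.0, "Peers": 4.5, "Direct Reports": 3.5}, self_score=4.5))
# realized strength
print(classify({"Manager": 2.0, "Peers": 3.0}, self_score=4.0))
# unrealized opportunity
```

In the second example the “All But Self” score is 2.5 while the subject rated themselves 4.0, so the 1.5-point difference marks the opportunity as unrealized.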

Q: What are the white dots in the bar charts in the 360 report?

A: White dots represent the high and low scores from individual raters in that rater category so that the range in scores can be seen. Hover over the bar to see the low, high, and average scores.

Q: How is “Gap” computed?

A: The gap score is the difference between the “All But Self” score and the “Self” score.

Q: Can an individual set a specific numeric goal for each competency? For example, improve this score by 0.2?

A: Yes. Development goals can be created directly from a 360 report. Once in the Focus Areas section, an individual can create that numeric goal for themselves under Key Results.

Coach FAQs

Q: Is there any way to compile rater information to look at rater tendencies (e.g., leniency, stringency)?

A: Yes, at the rater group level (not for individual raters). On the “All Responses” tab of the 360 report, you can choose to see scores for all scaled questions mapped to a competency across one, multiple, or all rater categories to help you find trends. You can also view the range of scores provided on a competency or question by rater group in various sections of the report.